Key Takeaways

- Adoption vs. Visibility: While 83% of enterprises use AI, a mere 13% report having strong visibility into how it interacts with their data.

- The Identity Crisis: AI is being treated like "just another user." Only 16% of organizations treat AI as a distinct identity class, which helps explain why two-thirds have already caught AI tools accessing more data than necessary.

- Pervasive Governance Gaps: Only 7% of companies have a dedicated AI governance team, and just 11% feel fully prepared for emerging AI regulations.

Digging deeper into the 2025 State of AI Data Security Report from Cyera reveals just how wide these gaps are:

🔹 Stuck in Pilots: While AI use is widespread, most organizations remain at an early stage: 55% are confined to pilot programs or narrow use cases, and only 28% report extensive adoption.

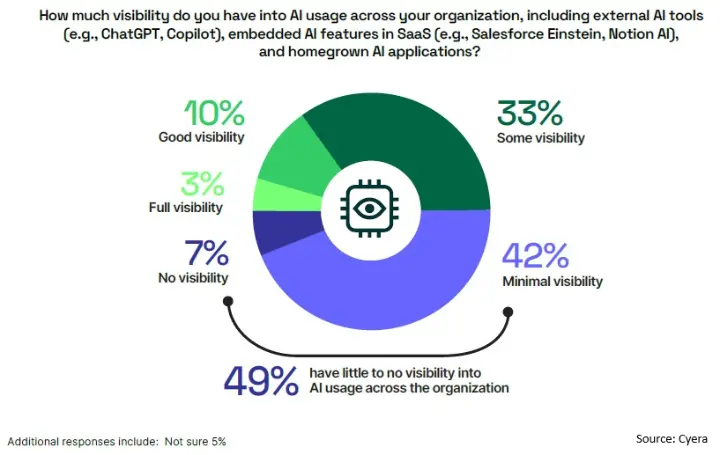

🔹 AI Blind Spots: Nearly half of organizations (49%) admit to having little or no visibility into AI usage across their enterprise.

🔹 Reactive Monitoring: Monitoring is often treated as a forensic tool rather than a defense mechanism. A third of organizations review AI activity logs only after an incident occurs, and just 9% monitor them in real time.

🔹 Inability to Intervene: More than half of enterprises (57%) cannot block or restrict risky AI activities in real time. A third (33%) are aware of misuse but have no controls to stop it.

🔹 Missing Prompt Guardrails: Controls at the prompt and output layers are weak. Only 41% filter risky inputs, and just 26% redact or mask sensitive data in AI outputs.

🔹 Fragmented Ownership: Responsibility for AI governance is scattered. Ownership is most often shared (34%), held by the CIO (17%), or assigned to the CISO (12%), which can leave gaps in accountability.

🔹 Shadow AI: 40% of organizations acknowledge that unsanctioned "shadow AI" tools are already operating within their environment, outside of official oversight.

🔹 Permissive by Default: Over-access is a significant issue. One in five organizations (21%) grants AI systems broad access to sensitive data from the start.

🔹 Siloed Defenses: Data security and identity governance are rarely connected. Only 9% of organizations have fully integrated these controls for AI, while 39% admit the two operate completely separately.

The report is a wake-up call. We need to decide whether the AI we're deploying is a strategic advantage or an unmanaged liability.

What is the first step your team is taking to close this critical AI readiness gap?

#AIDataSecurity #Cybersecurity #ArtificialIntelligence #AIGovernance #DataGovernance #CISOs #TechLeadership #RiskManagement #IAM #LLMSecurity

🔎 How prepared are companies for the data security risks of AI?