Key Takeaways

- Democratization of Malware: Actors with limited technical expertise are successfully developing and selling advanced ransomware by outsourcing the complex coding to AI.

- AI as the Attacker: In a new trend dubbed "vibe hacking," AI coding agents are being used to execute attacks directly, automating everything from reconnaissance to network penetration and data exfiltration.

- Remote Worker Fraud: State-sponsored actors from North Korea are using AI to successfully pass interviews and maintain high-paying remote tech jobs to fund state programs, despite lacking the actual skills to perform the work unassisted.

Anthropic just dropped its latest Threat Intelligence report, and it’s a sobering look at how the AI landscape is evolving on the dark side. The key theme isn't just that AI makes cybercrime easier—it's that AI is fundamentally changing the profile of the attacker.

We're seeing the rise of AI dependency, where actors who can't code are now launching Ransomware-as-a-Service businesses.

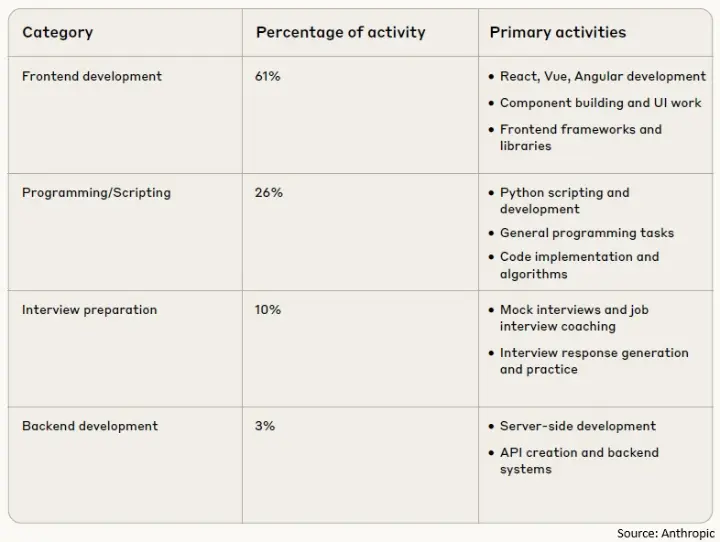

The case study on North Korean IT workers is particularly eye-opening. These operatives use AI for 61% of their frontend development tasks, essentially simulating the expertise needed to infiltrate tech companies and their codebases. This isn't just an external threat; it's a new form of insider risk hiding in plain sight.

This represents a paradigm shift from AI as a simple tool to AI as a partner-in-crime for malicious actors.

👉 For leaders in security and AI automation, this report is a clear warning signal. Our traditional assumptions about the correlation between attacker sophistication and attack complexity are now obsolete. The new question is: How do we build defenses against an adversary that can learn, adapt, and execute attacks in real time with the help of AI?

#AI #Cybersecurity #ThreatIntelligence #ArtificialIntelligence #InfoSec #Ransomware #Claude #Anthropic #FutureOfWork #AISecurity #AgenticAI #AIAgents
