Key Takeaways

- Prompt injection exploits how AI agents interpret language instructions, blurring the line between developer intent and user input.

- It’s persistent, if not permanent: OpenAI notes prompt injections may never be fully solved but can be continuously mitigated.

- Defense requires proactive strategies, including adversarial training, rapid patching, monitoring, and governance controls.

Prompt injection isn’t a theoretical threat; it’s foundational to how LLM-based systems work. Because modern AI models treat every piece of text as potential instruction, a malicious prompt hidden in a webpage, document, or email can override your system’s intent and induce harmful behavior. That could mean leaking sensitive data, misconfiguring workflows, or even compromising downstream systems.

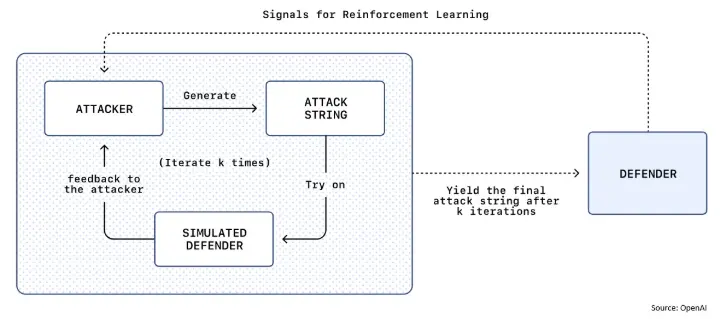

OpenAI’s recent defense work on ChatGPT Atlas highlights both the scale of the problem and how the industry is responding. They’ve built an automated rapid response loop that continuously hunts for new injection techniques and ships mitigations faster, combining red-teaming with adversarially trained models and system safeguards. But even with these layers, they acknowledge prompt injection is a long-term AI security challenge, not a checkbox item.

For enterprises moving AI agents into production, here’s what you need to know:

1️⃣ Detection matters more than prevention: No defense is perfect. Plan for incidents and mitigations, not just prevention.

2️⃣ Limit access when possible: Limit agent permissions and treat external data sources with caution.

3️⃣ Human in the loop: Critical decisions should still require human confirmation. Always review such requests carefully and do not assume the agent is doing the right thing.

4️⃣ Give agents explicit instructions when possible: Avoid overly broad prompts with wide latitude that makes it easier for hidden or malicious content to influence the agent.

5️⃣ Invest in dedicated solutions for prompt filtering and abuse detection: Agentic AI vastly expands the security threat surface and that defense requires continuous investment, not a one-time fix.

🔍 Strategic takeaway: As AI autonomy grows, so does the attack surface. Prompt injection underscores that AI security isn’t just about models; it’s about governance, detection, and continuous adaptation. Embrace defense as a process, not a product.

#AIsecurity #GenAI #PromptInjection #AIGovernance #AIAgents #Cybersecurity #EnterpriseAI #RiskManagement #PromptDefense

What is prompt injection, why is it dangerous, and how can enterprises defend against it?